What Is New in ARKit 2

- Overview

- New Features in ARKit 2

- Some other WWDC Sessions Related to AR

- Some other materials for a better AR app:

  - Building Your First AR Experience

  - Managing Session Lifecycle and Tracking Quality

  - Human Interface Guidelines - Augmented Reality

  - Handling 3D Interaction and UI Controls in Augmented Reality

  - Creating a Multiuser AR Experience

  - SwiftShot: Creating a Game for Augmented Reality

  - Recognizing Images in an AR Experience

  - Scanning and Detecting 3D Objects

Overview

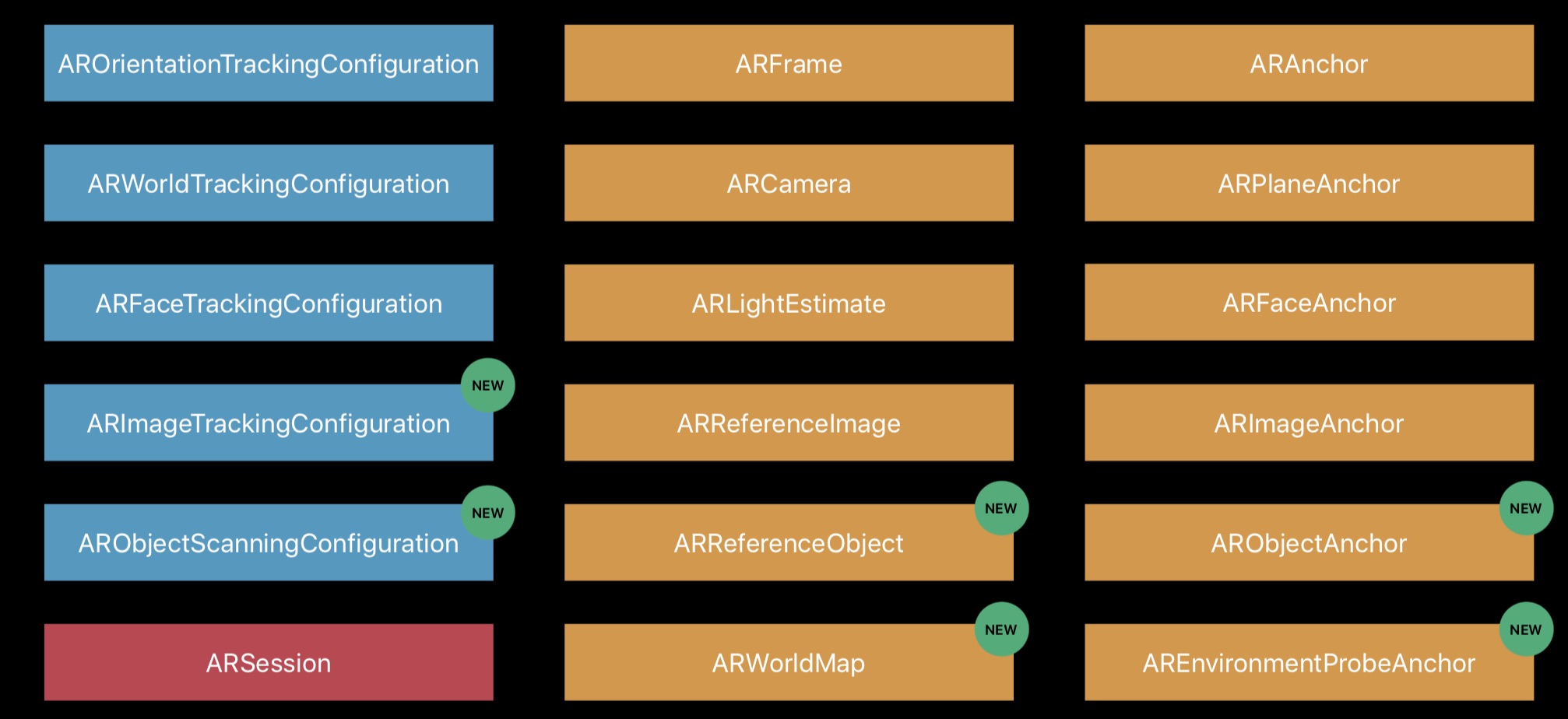

In ARKit 1 (including ARKit 1.5 in iOS 11.3), we have:

- Device position and orientation from the world tracking process

- Horizontal and vertical plane detection from the world tracking process

- Lighting estimation

- AR face tracking

In ARKit 2, we have:

- Saving and loading maps

- Environment Texturing

- Image detection and tracking

- 3D object detection

- Improved face tracking

New Features in ARKit 2

Saving and Loading Maps

World Tracking Recap:

- Position and orientation of the device.

- Physical scale in the scene.

- 3D feature points.

- Relocalization (iOS 11.3): when the AR session is interrupted, for example when the app goes to the background and comes back, ARKit can relocalize the device to its previous coordinate system. This is implemented by storing the mapping between the real world and the coordinate system (the `ARWorldMap`). However, in iOS 11.3 this mapping is not exposed to developers.

World Tracking Enhancements:

- Saving and loading maps: `ARWorldMap` is exposed to developers.

- Faster initialization and plane detection

- More robust tracking and plane detection

- More accurate plane extent and boundary

- Continuous autofocus

- New 4:3 video formats (iPad is also 4:3)

Saving and loading maps:

`ARWorldMap` contains:

- Mapping of physical 3D space: represents 3D feature points in the coordinate system.

- Mutable list of named anchors: for restoring the previous 3D environment (such as lighting node anchors) and relocalizing previously added virtual objects.

- Raw feature points and extent: for debugging and visualization.

- Serialization: for storing the map to a file and restoring it from one.
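A minimal Swift sketch of the serialization step, assuming an existing `ARSession` and a hypothetical file name `worldMap.arexperience` in the Documents directory. `ARWorldMap` supports `NSSecureCoding`, so it can be archived with `NSKeyedArchiver`; restoring means passing the unarchived map to `initialWorldMap` of a new `ARWorldTrackingConfiguration`.

```swift
import ARKit

// Hypothetical storage location for the archived map.
let worldMapURL = FileManager.default
    .urls(for: .documentDirectory, in: .userDomainMask)[0]
    .appendingPathComponent("worldMap.arexperience")

// Save: ask the running session for its current map, then archive it to disk.
func saveWorldMap(from session: ARSession, completion: @escaping (Error?) -> Void) {
    session.getCurrentWorldMap { worldMap, error in
        guard let map = worldMap else {
            completion(error)
            return
        }
        do {
            let data = try NSKeyedArchiver.archivedData(withRootObject: map,
                                                        requiringSecureCoding: true)
            try data.write(to: worldMapURL, options: .atomic)
            completion(nil)
        } catch {
            completion(error)
        }
    }
}

// Load: unarchive the map and start a new world tracking session from it.
func restoreWorldMap(into session: ARSession) throws {
    let data = try Data(contentsOf: worldMapURL)
    guard let map = try NSKeyedUnarchiver.unarchivedObject(ofClass: ARWorldMap.self,
                                                           from: data) else {
        throw CocoaError(.fileReadCorruptFile)
    }
    let configuration = ARWorldTrackingConfiguration()
    configuration.initialWorldMap = map
    session.run(configuration, options: [.resetTracking, .removeExistingAnchors])
}
```

After `restoreWorldMap` runs, tracking typically reports `.limited(.relocalizing)` until the user points the device at the previously mapped area.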

We can use the map in two different ways:

- Persistence: restore a previous AR scene in a new AR session, for example when you go to another room and come back, or close the AR app and reopen it later.

- Multiuser experience: share the map among devices over Wi-Fi or Bluetooth.

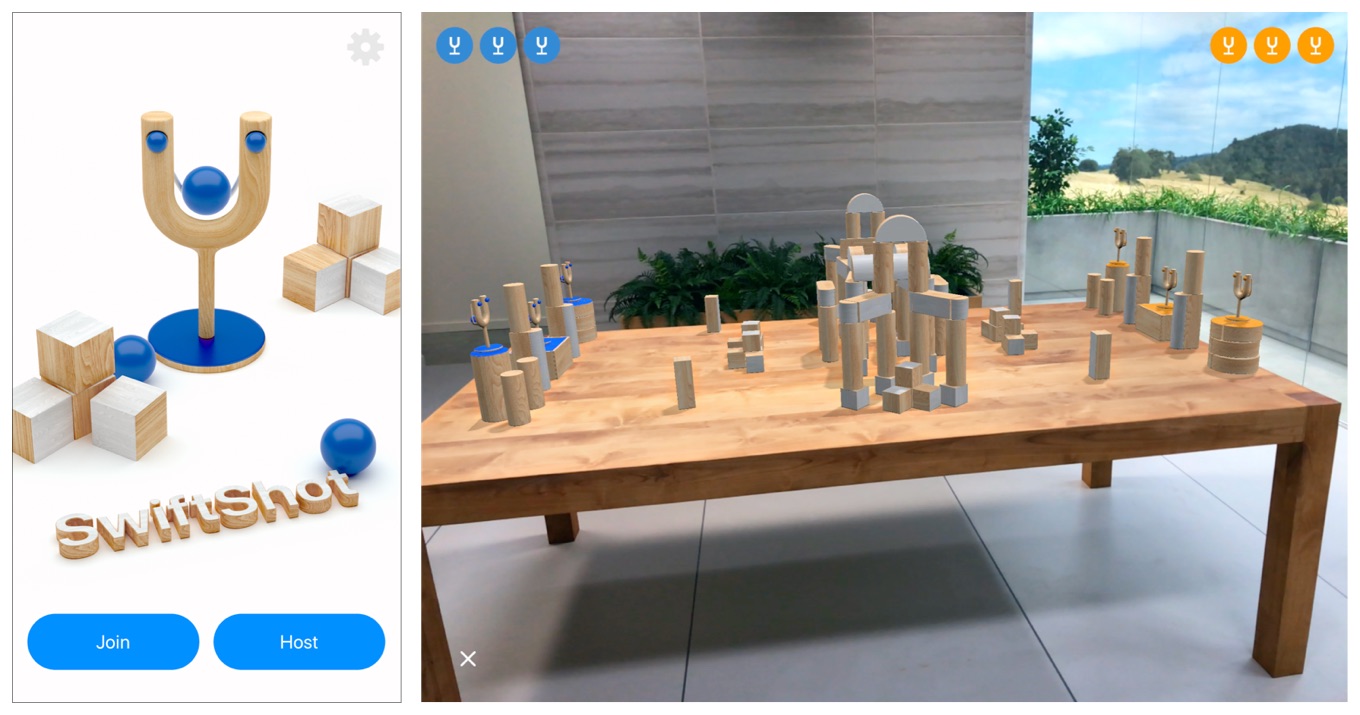

SwiftShot is a multiuser AR game that shares maps between devices.

How to get a good map

To share or restore a map, we first need a good one. A good map needs:

- Multiple points of view: if the map is recorded from only one point of view, restoring the coordinate system from a different point of view is likely to fail.

- A static, well-textured environment.

- Dense feature points.

We can use the `worldMappingStatus` property of `ARFrame` (of type `ARFrame.WorldMappingStatus`) to decide whether the current map is good enough to share or save.
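A sketch of that check, assuming an `ARSessionDelegate` and a hypothetical share/save button. Treating `.extending` as good enough is a judgment call; `.mapped` is the stronger signal.

```swift
import ARKit
import UIKit

// Enables a hypothetical "save / share map" button only when the map looks usable.
final class MapStatusObserver: NSObject, ARSessionDelegate {
    private let shareButton: UIButton

    init(shareButton: UIButton) {
        self.shareButton = shareButton
        super.init()
    }

    // Called for every new frame (on the main queue unless a delegate queue is set).
    func session(_ session: ARSession, didUpdate frame: ARFrame) {
        switch frame.worldMappingStatus {
        case .notAvailable, .limited:
            // Not enough of the environment has been mapped yet.
            shareButton.isEnabled = false
        case .extending:
            // Recently visited areas are mapped; the area around the device is still being mapped.
            shareButton.isEnabled = true
        case .mapped:
            // The visible area is adequately mapped.
            shareButton.isEnabled = true
        @unknown default:
            shareButton.isEnabled = false
        }
    }
}
```

Assign an instance to `session.delegate` and keep a strong reference to it elsewhere, since `ARSession` holds its delegate weakly.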

Environment Texturing

With environment texturing, virtual objects in the AR scene can reflect the real environment on their surfaces.
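A minimal sketch, assuming an `ARSCNView`: environment texturing is switched on through `environmentTexturing` on `ARWorldTrackingConfiguration`, and the reflection only shows on shiny, physically based materials. That `ARSCNView` applies the generated textures to scene lighting on its own is an assumption based on its default behavior.

```swift
import ARKit
import SceneKit

// Runs world tracking with automatic environment texturing enabled.
func runWithEnvironmentTexturing(on sceneView: ARSCNView) {
    let configuration = ARWorldTrackingConfiguration()
    configuration.planeDetection = [.horizontal]
    configuration.environmentTexturing = .automatic
    sceneView.session.run(configuration)
}

// A shiny, metallic sphere: physically based materials pick up environment reflections.
func makeReflectiveSphere(radius: CGFloat = 0.05) -> SCNNode {
    let sphere = SCNSphere(radius: radius)
    let material = SCNMaterial()
    material.lightingModel = .physicallyBased
    material.metalness.contents = 1.0  // fully metallic
    material.roughness.contents = 0.0  // mirror-like surface
    sphere.firstMaterial = material
    return SCNNode(geometry: sphere)
}
```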

Image Tracking

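In ARKit 1, moving objects cannot be tracked. In ARKit 2, specified images can be tracked in the AR scene, even while they move.

The classes in ARKit 2 for image tracking are `ARReferenceImage` (the image to look for), `ARImageTrackingConfiguration` or `ARWorldTrackingConfiguration` (the configuration that runs the detection), and `ARImageAnchor` (the result for each detected image).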

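The detected `ARImageAnchor` objects expose properties such as `referenceImage` (which reference image was found), `isTracked` (whether the image is currently being tracked), and the inherited `transform` (its position and orientation).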
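A sketch of starting image tracking and reading those anchor properties, assuming the reference images live in a hypothetical asset catalog group named "AR Resources" (each image needs its physical size set in Xcode).

```swift
import ARKit

// Starts image-only tracking with the images from a hypothetical asset catalog group.
func runImageTracking(on session: ARSession) {
    guard let referenceImages = ARReferenceImage.referenceImages(
        inGroupNamed: "AR Resources", bundle: nil) else {
        print("Missing the expected asset catalog group.")
        return
    }
    let configuration = ARImageTrackingConfiguration()
    configuration.trackingImages = referenceImages
    // Track up to two images at the same time.
    configuration.maximumNumberOfTrackedImages = 2
    session.run(configuration, options: [.resetTracking, .removeExistingAnchors])
}

// Summarizes an image anchor, e.g. from session(_:didUpdate:) in an ARSessionDelegate.
func describe(_ imageAnchor: ARImageAnchor) -> String {
    let image = imageAnchor.referenceImage
    let position = imageAnchor.transform.columns.3  // translation column of the transform
    return "\(image.name ?? "unnamed image"): tracked=\(imageAnchor.isTracked), "
        + "size=\(image.physicalSize), position=(\(position.x), \(position.y), \(position.z))"
}
```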

The specified image should:

- Have a broad histogram

- Not have multiple uniform color regions

- Not have repeated structures

The session demos this with a cat picture frame. The inputs of the demo are:

- a static image of the cat, identical to the one in the picture frame

- a video of the cat

The video is played at the position of the picture frame, with the same orientation as the frame.
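A sketch of how the cat-video demo described above could be rebuilt with SceneKit, assuming an `ARSCNView` delegate and a bundled video with the hypothetical name `cat.mp4`. It relies on SceneKit accepting an `AVPlayer` as material contents.

```swift
import ARKit
import AVFoundation
import SceneKit

// Plays a (hypothetical) bundled video on top of each detected reference image.
final class VideoOverlayDelegate: NSObject, ARSCNViewDelegate {
    private let player: AVPlayer? = {
        guard let url = Bundle.main.url(forResource: "cat", withExtension: "mp4") else { return nil }
        return AVPlayer(url: url)
    }()

    func renderer(_ renderer: SCNSceneRenderer, didAdd node: SCNNode, for anchor: ARAnchor) {
        guard let imageAnchor = anchor as? ARImageAnchor, let player = player else { return }
        // Size the plane to the physical size of the detected picture, in meters.
        let size = imageAnchor.referenceImage.physicalSize
        let plane = SCNPlane(width: size.width, height: size.height)
        plane.firstMaterial?.diffuse.contents = player
        let planeNode = SCNNode(geometry: plane)
        // SCNPlane is vertical by default; rotate it into the image anchor's x-z plane.
        planeNode.eulerAngles.x = -.pi / 2
        // The anchor's node follows the image, so the video stays on the frame.
        node.addChildNode(planeNode)
        player.play()
    }
}
```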

There are two configuration classes that support image tracking:

| ARImageTrackingConfiguration | ARWorldTrackingConfiguration |
|---|---|
| Has no world origin | Has a world origin |
| After detecting the image, content is placed only on or around the image. | After detecting the image, virtual objects can also be placed outside the detected image plane. |

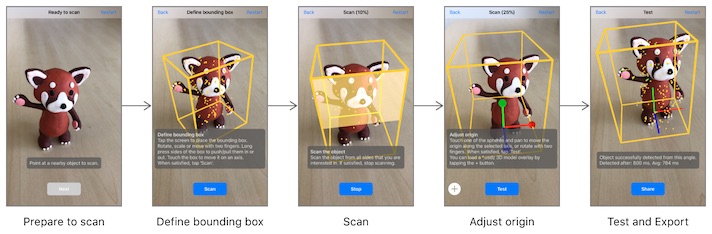

3D Object Detection

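The 3D object detection workflow is: scan the real object into an `ARReferenceObject`, add it to the `detectionObjects` of an `ARWorldTrackingConfiguration`, and receive an `ARObjectAnchor` when ARKit detects the object.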

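The `ARObjectAnchor` exposes the detected object as its `referenceObject` property, along with the inherited `transform` giving the object's position and orientation.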

`ARReferenceObject` is the scanned 3D object. It has properties such as `name`, `center`, `extent`, and `rawFeaturePoints`.
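A sketch of the detection side of that workflow, assuming a hypothetical asset catalog group named "Gallery" that holds `.arobject` resources; loading a single exported file is shown as an alternative.

```swift
import ARKit

// Starts world tracking that also looks for scanned objects from a hypothetical asset group.
func runObjectDetection(on session: ARSession) {
    guard let referenceObjects = ARReferenceObject.referenceObjects(
        inGroupNamed: "Gallery", bundle: nil) else {
        print("Missing the expected asset catalog group.")
        return
    }
    let configuration = ARWorldTrackingConfiguration()
    configuration.detectionObjects = referenceObjects
    session.run(configuration)
}

// Alternatively, load a single .arobject file (for example one exported by a scanning app).
func loadReferenceObject(at url: URL) throws -> ARReferenceObject {
    return try ARReferenceObject(archiveURL: url)
}

// Reports each detected object; keep a strong reference, since ARSession.delegate is weak.
final class ObjectDetectionDelegate: NSObject, ARSessionDelegate {
    func session(_ session: ARSession, didAdd anchors: [ARAnchor]) {
        for case let objectAnchor as ARObjectAnchor in anchors {
            let object = objectAnchor.referenceObject
            print("Detected \(object.name ?? "object") with extent \(object.extent)")
        }
    }
}
```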

An `ARReferenceObject` contains only the spatial feature information needed for ARKit to recognize the real-world object; it is not a displayable 3D reconstruction of that object.

To get an `ARReferenceObject`, we scan the real object and store the result as a file (.arobject) or in an Xcode asset catalog for ARKit to use. Apple provides a sample app that scans 3D objects to produce an `ARReferenceObject`; see Scanning and Detecting 3D Objects for details.
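A sketch of the capture and export step, assuming the session runs `ARObjectScanningConfiguration` and that `boxTransform`, `center`, and `extent` describe a bounding box the user has placed around the object. These inputs are hypothetical here; Apple's scanning sample provides the UI for defining them.

```swift
import ARKit

// Starts the scanning configuration used while capturing an object.
func startScanning(session: ARSession) {
    let configuration = ARObjectScanningConfiguration()
    configuration.planeDetection = .horizontal
    session.run(configuration, options: .resetTracking)
}

// Captures the features inside the bounding box and writes them to an .arobject file.
func exportScannedObject(session: ARSession,
                         boxTransform: simd_float4x4,
                         center: simd_float3,
                         extent: simd_float3,
                         to url: URL,
                         completion: @escaping (Error?) -> Void) {
    session.createReferenceObject(transform: boxTransform,
                                  center: center,
                                  extent: extent) { object, error in
        guard let object = object else {
            completion(error)
            return
        }
        do {
            // The exported file can later be loaded with ARReferenceObject(archiveURL:).
            try object.export(to: url, previewImage: nil)
            completion(nil)
        } catch {
            completion(error)
        }
    }
}
```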

Once a scanned object is detected in the real world, we can dynamically add information around it (a museum is a good use case), as the demo in the session does.
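A sketch of museum-style labeling, assuming an `ARSCNView` whose configuration already detects reference objects. The label text (the object's `name`) stands in for real exhibit information, and the scale and offset values are illustrative choices.

```swift
import ARKit
import SceneKit
import UIKit

// Attaches a camera-facing text label just above each detected object.
final class ExhibitLabelDelegate: NSObject, ARSCNViewDelegate {
    func renderer(_ renderer: SCNSceneRenderer, didAdd node: SCNNode, for anchor: ARAnchor) {
        guard let objectAnchor = anchor as? ARObjectAnchor else { return }
        let object = objectAnchor.referenceObject

        let text = SCNText(string: object.name ?? "Exhibit", extrusionDepth: 0.5)
        text.font = UIFont.systemFont(ofSize: 10)
        let textNode = SCNNode(geometry: text)
        // SCNText geometry is large in scene units; scale it down to a few centimeters.
        textNode.scale = SCNVector3(0.002, 0.002, 0.002)
        // center and extent are in the anchor's space: place the label 2 cm above the box top.
        textNode.position = SCNVector3(object.center.x,
                                       object.center.y + object.extent.y / 2 + 0.02,
                                       object.center.z)
        // Keep the label facing the camera.
        textNode.constraints = [SCNBillboardConstraint()]
        node.addChildNode(textNode)
    }
}
```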

Face Tracking Enhancements

With face tracking, we can place something on it or around it.

Enhancements in ARKit 2:

- Gaze tracking

- Tongue support

Gaze and tongue can be used as input to the AR app.
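A sketch of reading both inputs from `ARFaceAnchor`, assuming a device with a TrueDepth camera. `lookAtPoint` gives the gaze estimate and the `.tongueOut` blend shape gives the tongue coefficient; the 0.5 threshold is a hypothetical choice.

```swift
import ARKit

// Starts face tracking on devices that support it.
func runFaceTracking(on session: ARSession) {
    guard ARFaceTrackingConfiguration.isSupported else { return }
    session.run(ARFaceTrackingConfiguration())
}

// Reads gaze and tongue values on every face update.
final class FaceInputDelegate: NSObject, ARSessionDelegate {
    // Hypothetical threshold for treating the tongue as "out".
    private let tongueThreshold: Float = 0.5

    func session(_ session: ARSession, didUpdate anchors: [ARAnchor]) {
        for case let face as ARFaceAnchor in anchors {
            // Estimated point the eyes are looking at, in the face anchor's coordinate space.
            let gaze = face.lookAtPoint
            // Blend shape coefficients range from 0.0 (neutral) to 1.0 (maximum movement).
            let tongue = face.blendShapes[.tongueOut]?.floatValue ?? 0
            if tongue > tongueThreshold {
                print("Tongue out; gaze at (\(gaze.x), \(gaze.y), \(gaze.z))")
            }
        }
    }
}
```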

New changes in one screenshot:

Some other WWDC Sessions Related to AR

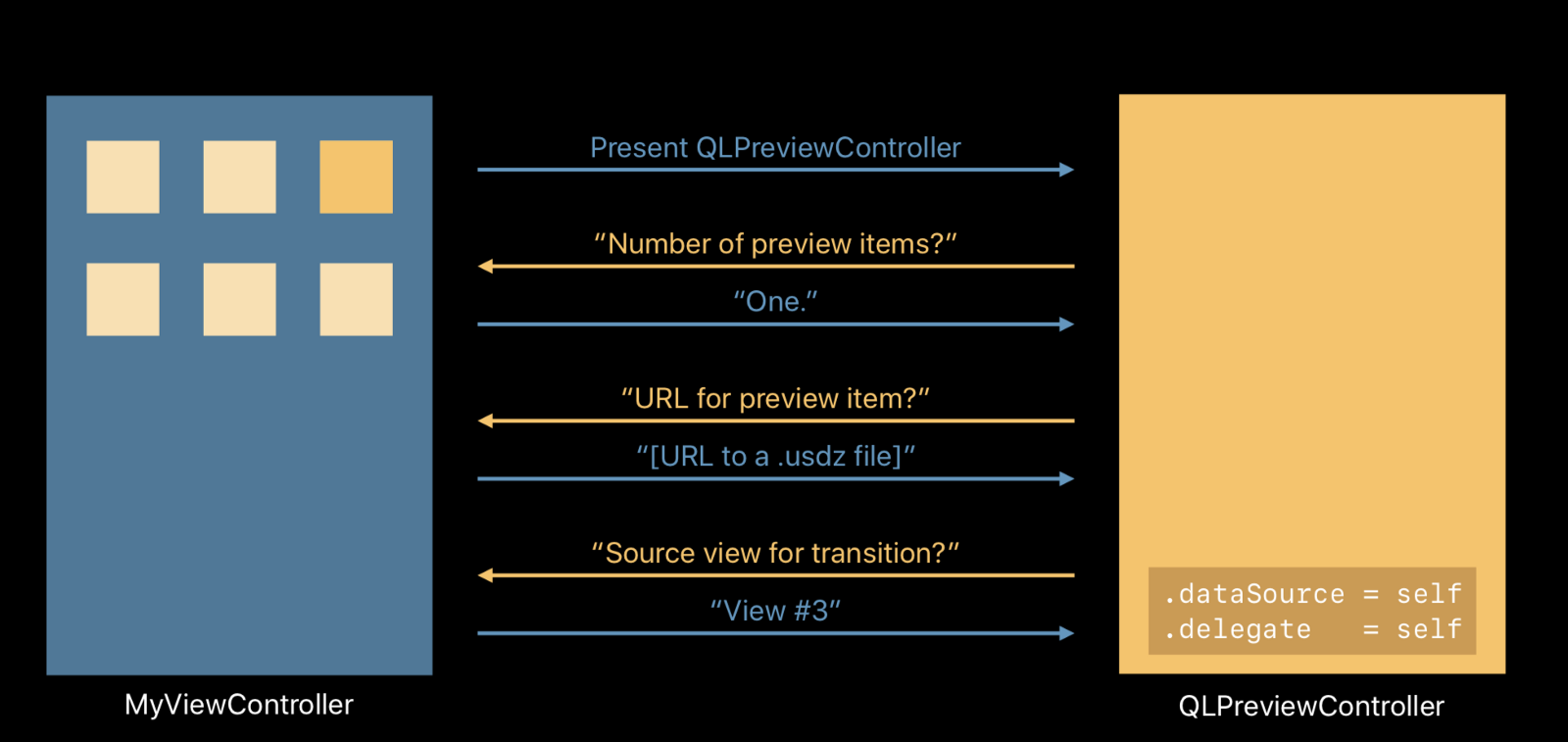

Integrating Apps and Content with AR Quick Look

A deeper dive into a new feature in iOS that provides a way to preview any AR object from a USDZ file.

- There’s a great sequence diagram presented (see above; I wish more sessions had these!) for previewing USDZ objects, in which `QLPreviewController` plays a central role.

- For web developers, it covers HTML samples showing how to preview USDZ objects in Safari.

- Then it goes into a deep dive on how to create the actual USDZ objects, with more examples on new AR texturing capabilities.

- There’s also a quick overview on how to optimize the files, to keep the size down, and there’s a breakdown of the files that make up the USDZ format.
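A minimal sketch of the `QLPreviewController` path mentioned above, assuming a USDZ model bundled with the app under the hypothetical name `toy_robot.usdz`.

```swift
import QuickLook
import UIKit

// Presents a bundled USDZ model with AR Quick Look.
final class ModelViewController: UIViewController, QLPreviewControllerDataSource {
    private let modelURL = Bundle.main.url(forResource: "toy_robot", withExtension: "usdz")

    func showModel() {
        guard modelURL != nil else { return }
        let preview = QLPreviewController()
        preview.dataSource = self
        present(preview, animated: true)
    }

    func numberOfPreviewItems(in controller: QLPreviewController) -> Int {
        return modelURL == nil ? 0 : 1
    }

    func previewController(_ controller: QLPreviewController,
                           previewItemAt index: Int) -> QLPreviewItem {
        // Only called when numberOfPreviewItems returned 1, so modelURL is non-nil here.
        return modelURL! as NSURL
    }
}
```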

Inside SwiftShot: Creating an AR Game

Covers world map sharing, networking, and the physics of how to build an AR game, as well as some design insight (I have limited game dev experience so I’ll do the best I can below).

- Pointers to remember when designing an AR game, such as “encouraging” the user to move the device slowly for world mapping!

- It demonstrates the usage of image & object detection, world map sharing, and iBeacons for the game.

- Integrating ARKit with SceneKit and Metal, including the translation of physics data (position, velocity, and orientation) between them.

- Performance enhancement with the `BitStreamCodable` protocol.

- A small look at how audio was integrated into the game.

Creating Great AR Experiences

Best practices, mainly from a UX and design perspective (there are no code samples in this session).

- Sensible dos and don’ts that may be useful if you need help thinking about the product and empathizing with the user.

- They emphasize the importance of using transitions between AR scapes.

- Why AR is a special combination of touch and movement.

- They advise that minimizing battery impact should be a major focus. This is a challenge, given that they also recommend rendering at 60 FPS to avoid latency.

- There’s a lengthy demonstration of creating an AR fireplace, with complex texturing, etc. It looks great, but unfortunately no code samples accompany the demo.

Understanding ARKit Tracking and Detection

A good broad overview of all of the main AR concepts.

- This is such a good intro into not only AR on iOS, but AR in general, that it should have been part of 2017’s sessions when ARKit was first introduced. Better late than never. If you’re only going to watch one session, watch this one!

- It recaps the main features of ARKit (orientation, world tracking, and plane detection) and demos all of these in depth with code samples.

- It then demos the new features of ARKit 2: shared world mapping, image tracking, and object detection (which has been available in the Vision framework, but is now also accessible in ARKit).

- A good explanation of a core AR principle, Visual Inertial Odometry (VIO), is given. Short of going into the actual physics equations behind it, this should give you a great understanding of VIO.

Some other materials for a better AR app:

Building Your First AR Experience

This document demos an app for basic usage of ARKit.
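For orientation, a minimal sketch of that kind of basic app (not Apple's sample code itself): an `ARSCNView` running world tracking with plane detection and drawing a translucent overlay on each detected plane. Updating the overlay as planes grow (`renderer(_:didUpdate:for:)`) is omitted.

```swift
import ARKit
import SceneKit
import UIKit

final class PlaneViewController: UIViewController, ARSCNViewDelegate {
    private let sceneView = ARSCNView()

    override func viewDidLoad() {
        super.viewDidLoad()
        sceneView.frame = view.bounds
        sceneView.autoresizingMask = [.flexibleWidth, .flexibleHeight]
        sceneView.delegate = self
        view.addSubview(sceneView)
    }

    override func viewWillAppear(_ animated: Bool) {
        super.viewWillAppear(animated)
        let configuration = ARWorldTrackingConfiguration()
        configuration.planeDetection = [.horizontal, .vertical]
        sceneView.session.run(configuration)
    }

    override func viewWillDisappear(_ animated: Bool) {
        super.viewWillDisappear(animated)
        sceneView.session.pause()
    }

    // Visualize each newly detected plane with a translucent overlay.
    func renderer(_ renderer: SCNSceneRenderer, didAdd node: SCNNode, for anchor: ARAnchor) {
        guard let planeAnchor = anchor as? ARPlaneAnchor else { return }
        let plane = SCNPlane(width: CGFloat(planeAnchor.extent.x),
                             height: CGFloat(planeAnchor.extent.z))
        plane.firstMaterial?.diffuse.contents = UIColor.cyan.withAlphaComponent(0.3)
        let planeNode = SCNNode(geometry: plane)
        planeNode.simdPosition = planeAnchor.center
        // SCNPlane is vertical by default; lay it flat in the anchor's x-z plane.
        planeNode.eulerAngles.x = -.pi / 2
        node.addChildNode(planeNode)
    }
}
```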

Managing Session Lifecycle and Tracking Quality

Make your AR experience more robust by:

- providing clear feedback, using `ARCamera.TrackingState`.

- recovering from interruptions, using `ARCamera.TrackingState.Reason.relocalizing`.

- resuming previous sessions, using `ARWorldMap`.
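A sketch of the first two points, assuming an `ARSessionDelegate` and a hypothetical `showMessage` hook for the UI; the message strings are illustrative.

```swift
import ARKit

final class TrackingFeedbackDelegate: NSObject, ARSessionDelegate {
    // Hypothetical hook for showing (or clearing, with nil) a message in the UI.
    var showMessage: (String?) -> Void = { message in
        if let message = message { print(message) }
    }

    func session(_ session: ARSession, cameraDidChangeTrackingState camera: ARCamera) {
        showMessage(TrackingFeedbackDelegate.message(for: camera.trackingState))
    }

    func sessionWasInterrupted(_ session: ARSession) {
        showMessage("Session interrupted.")
    }

    // Returning true asks ARKit to relocalize to the pre-interruption map
    // instead of starting over.
    func sessionShouldAttemptRelocalization(_ session: ARSession) -> Bool {
        return true
    }

    static func message(for state: ARCamera.TrackingState) -> String? {
        switch state {
        case .normal:
            return nil
        case .notAvailable:
            return "Tracking unavailable."
        case .limited(.excessiveMotion):
            return "Move the device more slowly."
        case .limited(.insufficientFeatures):
            return "Point the device at an area with more visible detail."
        case .limited(.initializing):
            return "Move the device around to start tracking."
        case .limited(.relocalizing):
            return "Return to where you were when the session was interrupted."
        case .limited:
            // Any other limited reason.
            return "Tracking is limited."
        }
    }
}
```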

Human Interface Guidelines - Augmented Reality

This guide describes how to render virtual objects, how to let users interact with them, and how to handle interruptions. It is aimed at UX design.

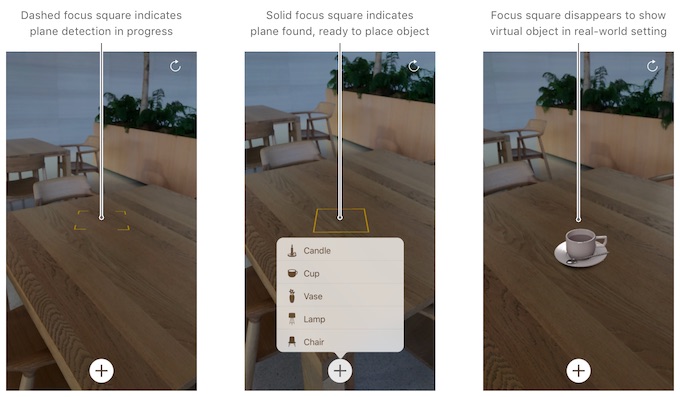

Handling 3D Interaction and UI Controls in Augmented Reality

This document describes best practices for visual feedback, gesture interactions, and realistic rendering in AR experiences, and supplies a demo app.
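A small sketch of one such gesture interaction (tap to place), assuming an `ARSCNView` that already detects planes. It uses `hitTest(_:types:)`, the iOS 11/12 API; iOS 14 deprecates it in favor of raycast queries.

```swift
import ARKit
import SceneKit
import UIKit

// Places a 5 cm box wherever the user taps on a detected plane.
final class TapToPlaceController: NSObject {
    let sceneView: ARSCNView

    init(sceneView: ARSCNView) {
        self.sceneView = sceneView
        super.init()
        let tap = UITapGestureRecognizer(target: self, action: #selector(handleTap(_:)))
        sceneView.addGestureRecognizer(tap)
    }

    @objc private func handleTap(_ gesture: UITapGestureRecognizer) {
        let point = gesture.location(in: sceneView)
        // Only accept hits that fall within the detected extent of an existing plane.
        guard let result = sceneView.hitTest(point, types: .existingPlaneUsingExtent).first else {
            return
        }
        let box = SCNNode(geometry: SCNBox(width: 0.05, height: 0.05, length: 0.05, chamferRadius: 0))
        // The box is centered on the hit point in world space.
        box.simdTransform = result.worldTransform
        sceneView.scene.rootNode.addChildNode(box)
    }
}
```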

Creating a Multiuser AR Experience

This document demos an app (with source code) showing how to transmit ARKit world-mapping data between nearby devices with the MultipeerConnectivity framework (introduced in iOS 7.0) to create a shared basis for AR experiences. MultipeerConnectivity supports peer-to-peer connectivity and the discovery of nearby devices. With MultipeerConnectivity, you can share not only the `ARWorldMap` but also user actions, which makes multiuser AR games possible.

However:

- Recording and transmitting a world map and relocalizing to a world map are time-consuming, bandwidth-intensive operations. A good design is needed for better performance.

- Users who receive the world map need to move their devices so they see a perspective similar to the host’s (the host also sends this perspective). This helps ARKit process the received map and establish a shared frame of reference for the multiuser experience.
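A sketch of the map exchange itself, assuming an already connected `MCSession` (peer discovery and advertising are omitted). The map is sent in `.reliable` mode because it must arrive intact, which is part of why the transfer is bandwidth-intensive.

```swift
import ARKit
import MultipeerConnectivity

// Host sends its world map; a receiving peer relocalizes to it.
final class WorldMapSharing: NSObject, MCSessionDelegate {
    let mcSession: MCSession
    let arSession: ARSession

    init(mcSession: MCSession, arSession: ARSession) {
        self.mcSession = mcSession
        self.arSession = arSession
        super.init()
        mcSession.delegate = self
    }

    // Host side: archive the current map and send it to every connected peer.
    func shareWorldMap() {
        arSession.getCurrentWorldMap { [weak self] worldMap, _ in
            guard let self = self, let map = worldMap,
                  let data = try? NSKeyedArchiver.archivedData(withRootObject: map,
                                                               requiringSecureCoding: true)
            else { return }
            try? self.mcSession.send(data, toPeers: self.mcSession.connectedPeers, with: .reliable)
        }
    }

    // Peer side: unarchive the map and restart tracking from it.
    func session(_ session: MCSession, didReceive data: Data, fromPeer peerID: MCPeerID) {
        guard let map = try? NSKeyedUnarchiver.unarchivedObject(ofClass: ARWorldMap.self,
                                                                from: data) else { return }
        DispatchQueue.main.async {
            let configuration = ARWorldTrackingConfiguration()
            configuration.initialWorldMap = map
            self.arSession.run(configuration, options: [.resetTracking, .removeExistingAnchors])
        }
    }

    // Remaining MCSessionDelegate requirements, unused in this sketch.
    func session(_ session: MCSession, peer peerID: MCPeerID, didChange state: MCSessionState) {}
    func session(_ session: MCSession, didReceive stream: InputStream,
                 withName streamName: String, fromPeer peerID: MCPeerID) {}
    func session(_ session: MCSession, didStartReceivingResourceWithName resourceName: String,
                 fromPeer peerID: MCPeerID, with progress: Progress) {}
    func session(_ session: MCSession, didFinishReceivingResourceWithName resourceName: String,
                 fromPeer peerID: MCPeerID, at localURL: URL?, withError error: Error?) {}
}
```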

SwiftShot: Creating a Game for Augmented Reality

This document demos the SwiftShot game shown at WWDC 2018, including:

- Designing Gameplay for AR

- Using Local Multipeer Networking and Sharing World Maps

- Synchronizing Gameplay Actions

- Solving Multiplayer Physics

Recognizing Images in an AR Experience

Detect known 2D images in the user’s environment, and use their positions to place AR content.

Scanning and Detecting 3D Objects

Record spatial features of real-world objects, then use the results to find those objects in the user’s environment and trigger AR content.