ARKit Usage

- Cool AR Apps in App Store
  - World Brush
  - IKEA Place
  - AR MeasureKit
- Requirement
- ARKit Usage
  - ARKit Related Techniques
  - ARKit in iOS System
  - ARKit Key Classes
  - ARSession
  - ARConfiguration
  - More on ARWorldTrackingConfiguration
  - Tracking Quality
  - ARFrame
  - HitTest for Real World Position
  - existingPlane
  - existingPlaneUsingExtent
  - estimatedHorizontalPlane
  - featurePoint
  - Display Virtual Object in Real World
  - Standard View
  - Custom View
- Best Practices and Limitations
  - Best Practices
  - Limitations
- AR from Google

Cool AR Apps in App Store

World Brush

World Brush is an AR experience where users can paint with brushes on the world around them. Every painting is saved at the approximate GPS location where it was created, and is recommended to other users nearby.

IKEA Place

IKEA Place lets you virtually ‘place’ IKEA products in your space.

AR MeasureKit

AR MeasureKit makes it really easy to measure different things in the world using your iPhone’s or iPad’s camera.

Requirement

- iOS 11 or later.

- iOS device with an A9 or later processor.

To make your app available only on devices supporting ARKit, use the arkit key in the UIRequiredDeviceCapabilities section of your app’s Info.plist. If augmented reality is a secondary feature of your app, use the ARWorldTrackingConfiguration.isSupported property to determine whether the current device supports the session configuration you want to use.
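As a minimal sketch of the runtime check for the "secondary feature" case, assuming the released API name ARWorldTrackingConfiguration (the function name canOfferARFeature is made up for illustration):

```swift
import ARKit

/// Returns true when the current device can run a world-tracking session.
/// `canOfferARFeature` is a hypothetical helper name, not part of ARKit.
func canOfferARFeature() -> Bool {
    return ARWorldTrackingConfiguration.isSupported
}
```

An app could use the result to hide or disable its AR entry point, for example `arButton.isHidden = !canOfferARFeature()`, where arButton is a hypothetical control.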

ARKit Usage

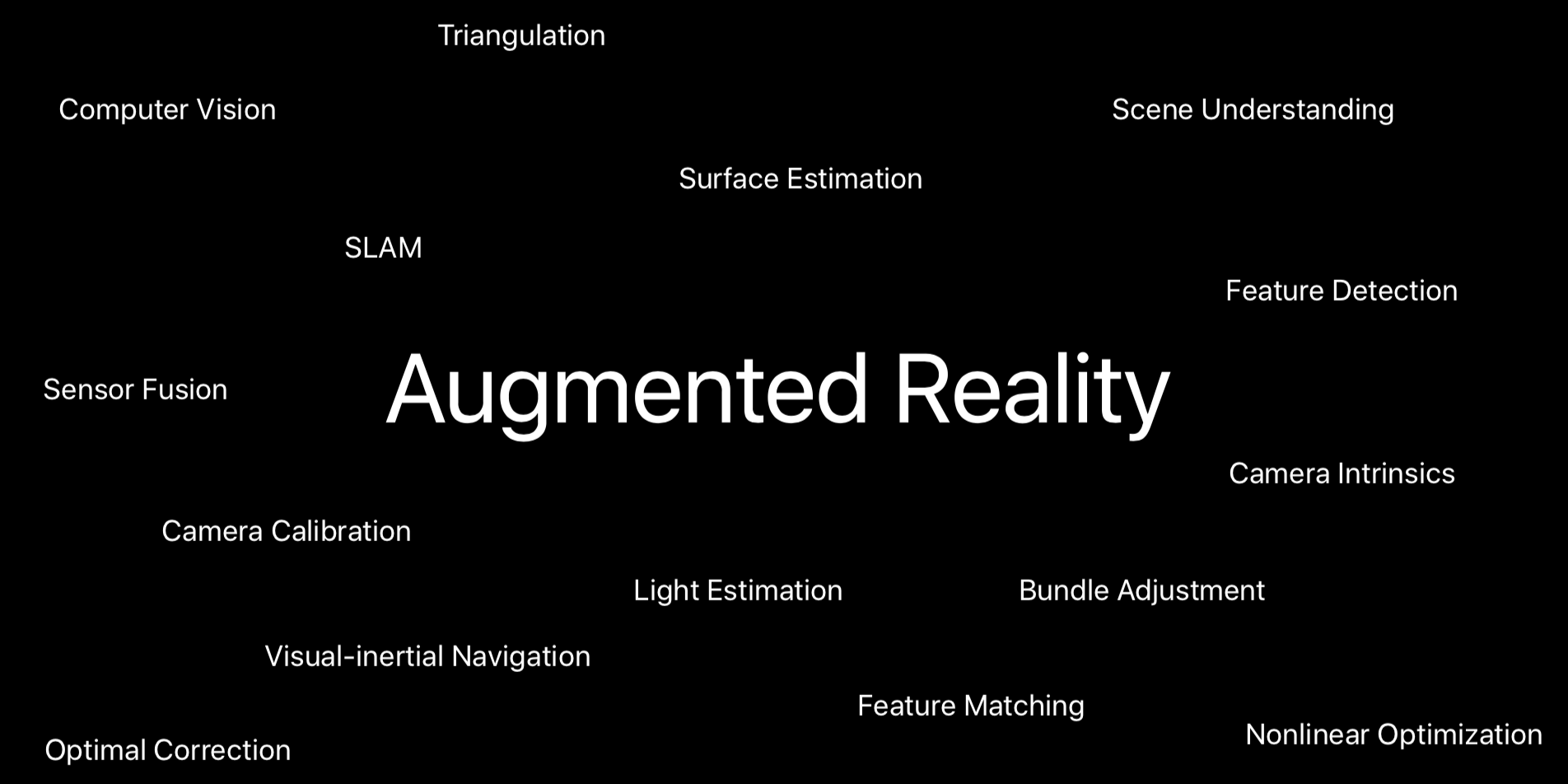

ARKit Related Techniques

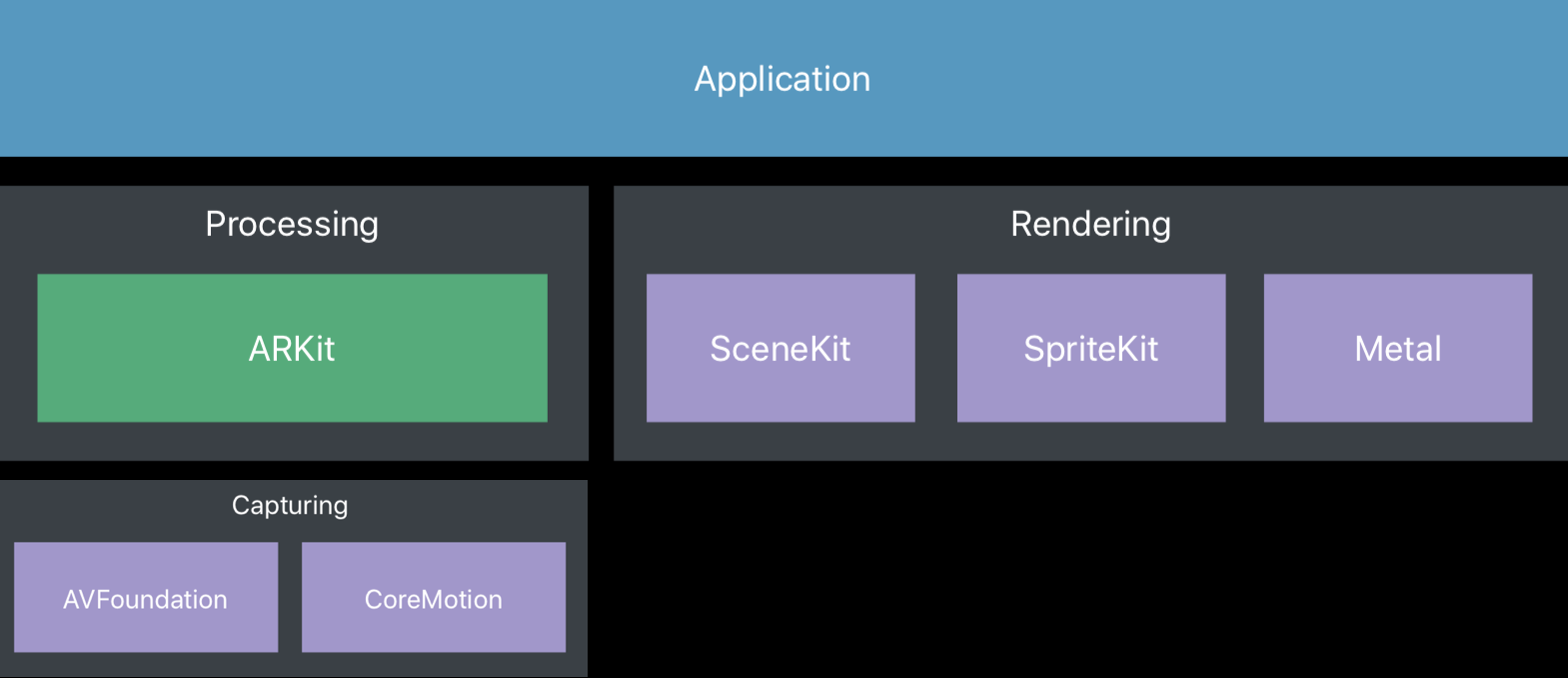

ARKit in iOS System

ARKit Key Classes

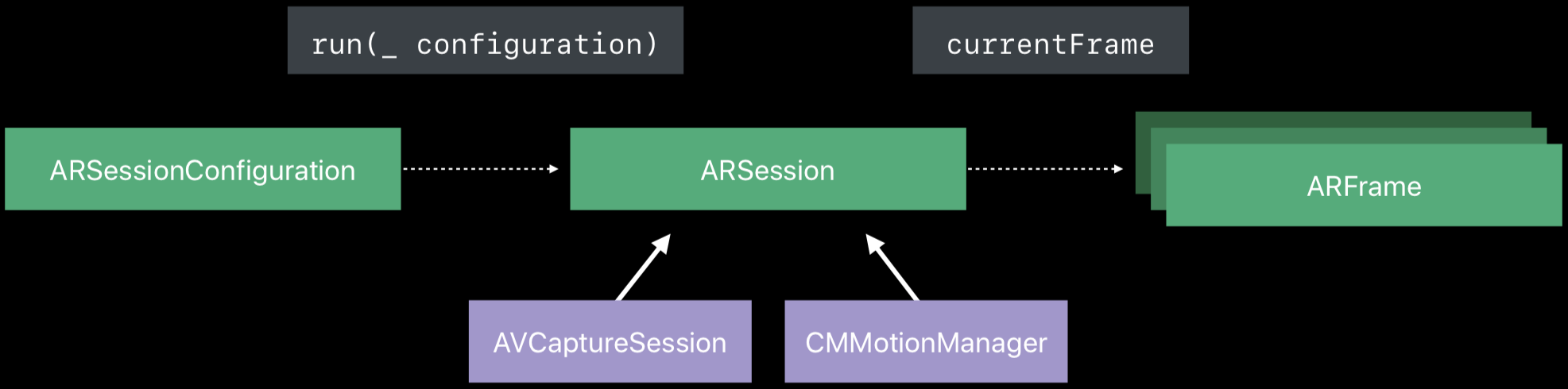

ARSession

An ARSession object coordinates the major processes that ARKit performs on your behalf to create an augmented reality experience. These processes include reading data from the device’s motion sensing hardware, controlling the device’s built-in camera, and performing image analysis on captured camera images. The session synthesizes all of these results to establish a correspondence between the real-world space the device inhabits and a virtual space where you model AR content.

Every AR experience built with ARKit requires a single ARSession object. If you use an ARSCNView or ARSKView object to easily build the visual part of your AR experience, the view object includes an ARSession instance. If you build your own renderer for AR content, you’ll need to instantiate and maintain an ARSession object yourself.

Running a session requires a session configuration: an instance of a concrete subclass of ARConfiguration, such as ARWorldTrackingConfiguration. These classes determine how ARKit tracks a device’s position and motion relative to the real world, and thus affect the kinds of AR experiences you can create.

ARConfiguration

ARConfiguration is an abstract class; you do not create or work with instances of this class.

To run an AR session, create an instance of the concrete ARConfiguration subclass that provides the kind of augmented reality experience you want to use in your app or game. Then, set up the configuration object’s properties and pass the configuration to your session’s run(_:options:) method. ARKit includes the following concrete configuration classes:

ARWorldTrackingConfiguration: Provides high-quality AR experiences that use the rear-facing camera to precisely track a device’s position and orientation and allow plane detection and hit testing. Creating and maintaining this correspondence between spaces requires tracking the device’s motion. The ARWorldTrackingConfiguration class tracks the device’s movement with six degrees of freedom (6DOF): specifically, the three rotation axes (roll, pitch, and yaw), and three translation axes (movement in x, y, and z).

AROrientationTrackingConfiguration: Provides basic AR experiences that use the rear-facing camera and track only a device’s orientation. Creating and maintaining this correspondence between spaces requires tracking the device’s motion. The AROrientationTrackingConfiguration class tracks the device’s movement with three degrees of freedom (3DOF): specifically, the three rotation axes (roll, pitch, and yaw). AROrientationTrackingConfiguration cannot track movement of the device, and 3DOF tracking does not support plane detection or hit testing. Use 3DOF tracking only as a fallback in situations where 6DOF tracking is temporarily unavailable.

ARFaceTrackingConfiguration: Provides AR experiences that use the front-facing camera and track the movement and expressions of the user’s face.

Face tracking is available only on iOS devices with a front-facing TrueDepth camera.

Apple’s official sample, Creating Face-Based AR Experiences, demonstrates how to place and animate 3D content that follows the user’s face and matches facial expressions, using the TrueDepth camera on iPhone X.
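Creating a concrete configuration, setting its properties, and passing it to run(_:options:) might look roughly like this. The function names are hypothetical, and the chosen property values and run options are just one reasonable setup, not a recommendation from the original text:

```swift
import ARKit

/// Builds a 6DOF world-tracking configuration.
/// `makeWorldTrackingConfiguration` is a hypothetical helper name.
func makeWorldTrackingConfiguration() -> ARWorldTrackingConfiguration {
    let configuration = ARWorldTrackingConfiguration()
    // Align the world's y-axis with gravity; the origin is where the session starts.
    configuration.worldAlignment = .gravity
    // Ask ARKit to attach a light estimate to each frame.
    configuration.isLightEstimationEnabled = true
    return configuration
}

/// Starts (or restarts) the session from a clean state.
func start(_ session: ARSession) {
    session.run(makeWorldTrackingConfiguration(),
                options: [.resetTracking, .removeExistingAnchors])
}
```

With ARSCNView or ARSKView, the session to pass in is the view's own `session` property.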

More on ARWorldTrackingConfiguration

Refer to About Augmented Reality and ARKit for the official explanation of world tracking.

The world tracking process can be illustrated as:

![]()

One question here: how does ARKit know how long 1 meter is in the real world? "How can Apple’s ARKit (Augmented Reality) do precise measurement with just one camera?" tries to answer this:

“When an iPhone camera is turned on, it doesn’t have two different images with which to calculate distances. However, a moment after the first image is taken it does have a second image. Thanks to data from the iPhone accelerometer sensors, it can also estimate the difference - from the first image to the second - of the iPhone camera’s 3D position and aim. Now we go back to those “known features” being tracked. For each image the iPhone doesn’t just do this for a single feature, it maps as many features as it can. Aside from doing the triangulation on each of the features in the images, it also does a comparison between the differences in each feature’s relationship to other features in the image. So now, like your brain, the iPhone has two different views of something, knows the approximate angles of focus, knows the distance between the lens position, is tracking known features and their relationship to each other. From this, the iPhone can get a very good approximation of how each feature is positioned in space with relation to the other features, essentially producing a 3D mapping of the space.”
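To make the triangulation idea concrete, here is a deliberately simplified sketch. It assumes the camera moved purely sideways between two images (the rectified stereo case), so that depth follows Z = f * B / d, where f is the focal length in pixels, B the distance the camera moved, and d how far the feature shifted between the images. ARKit's actual visual-inertial tracking is far more involved; the function name and the numbers are illustrative only.

```swift
/// Depth of one tracked feature from two views, for a pure sideways camera move.
/// Hypothetical helper: not an ARKit API.
/// - focalLengthPixels: camera focal length expressed in pixels
/// - baselineMeters: how far the camera moved between the two images
/// - disparityPixels: how far the feature shifted between the two images
func triangulatedDepth(focalLengthPixels: Double,
                       baselineMeters: Double,
                       disparityPixels: Double) -> Double? {
    // Without a positive baseline and disparity there is nothing to triangulate.
    guard focalLengthPixels > 0, baselineMeters > 0, disparityPixels > 0 else {
        return nil
    }
    return focalLengthPixels * baselineMeters / disparityPixels
}

// A 2 cm move that shifts a feature by 30 px, with f = 1500 px,
// places the feature about 1 meter away.
let depth = triangulatedDepth(focalLengthPixels: 1500,
                              baselineMeters: 0.02,
                              disparityPixels: 30)
```

The key point is that the baseline comes from the motion sensors in metric units, which is what gives the reconstructed scene a real-world scale.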

Tracking Quality

To get better tracking quality:

- Uninterrupted sensor data

- Textured environments

- Static scenes

If tracking quality changes, the tracking state will also change:

![]()

Tracking state changes are reported through the session(_:cameraDidChangeTrackingState:) method of ARSessionObserver, which ARSessionDelegate inherits.
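A minimal sketch of observing tracking state changes through the session delegate follows. The class name TrackingStateLogger is hypothetical, and printing stands in for whatever the app actually does with the state:

```swift
import ARKit

/// Logs every tracking state change reported by the session.
/// `TrackingStateLogger` is a hypothetical name used for illustration.
final class TrackingStateLogger: NSObject, ARSessionDelegate {
    func session(_ session: ARSession, cameraDidChangeTrackingState camera: ARCamera) {
        switch camera.trackingState {
        case .normal:
            print("Tracking is normal")
        case .limited(let reason):
            // The reason says why quality dropped, e.g. excessive motion.
            print("Tracking is limited: \(reason)")
        case .notAvailable:
            print("Tracking is not available")
        }
    }
}
```

To use it, assign an instance to the session's `delegate` property and keep a strong reference to it elsewhere, since the session holds its delegate weakly.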

ARFrame

World tracking yields the camera’s pose with six degrees of freedom (6DOF), which is then used for rendering. This information is stored in each ARFrame.

An ARFrame holds the video image and position tracking information captured as part of an AR session. There are two ways to access ARFrame objects produced by an AR session, depending on whether your app favors a pull or a push design pattern.

- Pull Pattern: get currentFrame from ARSession.

- Push Pattern: implement the session(_:didUpdate:) delegate method, and the session will call it once for each video frame it captures (at 60 frames per second by default).
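The two access patterns can be sketched as follows. The names currentCameraTransform and FrameReceiver are hypothetical, and storing the timestamp is only a placeholder for real per-frame work:

```swift
import ARKit

// Pull: ask the session for its most recent frame whenever you need it.
// Returns nil until the session has produced a first frame.
func currentCameraTransform(of session: ARSession) -> simd_float4x4? {
    return session.currentFrame?.camera.transform
}

// Push: the session hands every new frame to its delegate.
final class FrameReceiver: NSObject, ARSessionDelegate {
    private(set) var latestTimestamp: TimeInterval = 0

    func session(_ session: ARSession, didUpdate frame: ARFrame) {
        // Keep per-frame work short: this is called for every captured frame.
        latestTimestamp = frame.timestamp
    }
}
```

The pull pattern fits code that already has its own loop (for example a custom renderer), while the push pattern fits code that should react to each frame as it arrives.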

Key information in ARFrame:

ARCamera: Information about the camera position and imaging characteristics for a captured video frame in an AR session. Get camera from ARFrame.

ARLightEstimate: Estimated scene lighting information associated with a captured video frame in an AR session. Get lightEstimate from ARFrame.

Refer to ARKit by Example — Part 4: Realism - Lighting & PBR for mimicking the environment light.
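As a rough sketch of feeding the light estimate into a SceneKit light: the helper names below are hypothetical, and copying the values straight across is an assumption about how an app might want to use them (ARSCNView can also update lighting automatically).

```swift
import ARKit
import SceneKit

/// Applies estimated ambient lighting values to a SceneKit light.
/// Hypothetical helper, split out so it can be used without an ARFrame.
func apply(ambientIntensity: CGFloat, colorTemperature: CGFloat, to light: SCNLight) {
    light.intensity = ambientIntensity      // lumens; about 1000 is neutral
    light.temperature = colorTemperature    // Kelvin; about 6500 is neutral white
}

/// Reads the estimate from a frame, if there is one, and applies it.
func applyLightEstimate(from frame: ARFrame, to light: SCNLight) {
    // lightEstimate is nil when light estimation is disabled or not yet available.
    guard let estimate = frame.lightEstimate else { return }
    apply(ambientIntensity: estimate.ambientIntensity,
          colorTemperature: estimate.ambientColorTemperature,
          to: light)
}
```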

HitTest for Real World Position

By calling the hitTest(_:types:) method on ARSCNView, we get an array of ARHitTestResult objects describing the real-world positions that correspond to the given point in the view. The results are sorted by distance from the camera, nearest first. When calling the method, you specify one or more ARHitTestResult.ResultType values. There are four hit-test result types:

existingPlane

Return the result type from intersecting with an existing plane anchor.

existingPlaneUsingExtent

Return the result type from intersecting with an existing plane anchor, taking into account the plane’s extent.

estimatedHorizontalPlane

Return the result type from intersecting a horizontal plane estimate, determined for the current frame.

featurePoint

Return the result type from intersecting the nearest feature point.
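A hit test from a screen point might be sketched like this. The function name worldPosition is hypothetical, and the combination of result types is just one plausible choice. Note that later iOS versions deprecate hitTest(_:types:) in favor of raycasting, so treat this as matching the iOS 11 API described here.

```swift
import ARKit

/// Returns the world position of the nearest hit under a point in the view,
/// or nil if nothing was hit. `worldPosition` is a hypothetical helper name.
func worldPosition(at point: CGPoint, in sceneView: ARSCNView) -> simd_float3? {
    // Prefer detected planes, but also accept raw feature points.
    let results = sceneView.hitTest(point, types: [.existingPlaneUsingExtent, .featurePoint])
    // Results are sorted nearest first, so the first one is the closest hit.
    guard let nearest = results.first else { return nil }
    // The translation is stored in the fourth column of the transform matrix.
    let translation = nearest.worldTransform.columns.3
    return simd_float3(translation.x, translation.y, translation.z)
}
```

A typical caller would pass the location of a tap gesture in the view and place a node at the returned position.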

Display Virtual Object in Real World

Standard View

ARSCNView: A view for displaying AR experiences that augment the camera view with 3D SceneKit content.

ARSKView: A view for displaying AR experiences that augment the camera view with 2D SpriteKit content.
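A minimal sketch of the standard-view route with ARSCNView is shown below. The class name ARViewController and the box size and position are arbitrary choices for illustration; a real app also needs a camera usage description in its Info.plist.

```swift
import UIKit
import ARKit

/// Hosts an ARSCNView and shows one virtual box in front of the starting position.
/// `ARViewController` is a hypothetical name.
final class ARViewController: UIViewController {
    private let sceneView = ARSCNView()

    override func viewDidLoad() {
        super.viewDidLoad()
        sceneView.frame = view.bounds
        sceneView.autoresizingMask = [.flexibleWidth, .flexibleHeight]
        view.addSubview(sceneView)

        // A 10 cm box placed half a meter along -z from the world origin
        // (distances in SceneKit map to meters in an AR session).
        let box = SCNNode(geometry: SCNBox(width: 0.1, height: 0.1, length: 0.1, chamferRadius: 0))
        box.position = SCNVector3(0, 0, -0.5)
        sceneView.scene.rootNode.addChildNode(box)
    }

    override func viewWillAppear(_ animated: Bool) {
        super.viewWillAppear(animated)
        // The view owns its ARSession; it only needs a configuration to run.
        sceneView.session.run(ARWorldTrackingConfiguration())
    }

    override func viewWillDisappear(_ animated: Bool) {
        super.viewWillDisappear(animated)
        sceneView.session.pause()
    }
}
```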

Custom View

To display your AR experience in a custom view, you’ll need to:

- Retrieve video frames and tracking information from the session.

- Render those frame images as the backdrop for your view.

- Use the tracking information to position and draw AR content atop the camera image.

Refer to Displaying an AR Experience with Metal.

Best Practices and Limitations

Best Practices

World tracking is an inexact science. This process can often produce impressive accuracy, leading to realistic AR experiences. However, it relies on details of the device’s physical environment that are not always consistent or are difficult to measure in real time without some degree of error. To build high-quality AR experiences, be aware of these caveats and tips.

Refer to About Augmented Reality and ARKit.

Design AR experiences for predictable lighting conditions.

World tracking involves image analysis, which requires a clear image. Tracking quality is reduced when the camera can’t see details, such as when the camera is pointed at a blank wall or the scene is too dark.

Use tracking quality information to provide user feedback.

World tracking correlates image analysis with device motion. ARKit develops a better understanding of the scene if the device is moving, even if the device moves only subtly. Excessive motion—too far, too fast, or shaking too vigorously—results in a blurred image or too much distance for tracking features between video frames, reducing tracking quality. The ARCamera class provides tracking state reason information, which you can use to develop UI that tells a user how to resolve low-quality tracking situations.
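One way to turn the tracking state reason into user feedback is a small mapping function like the sketch below. The function name trackingHint and the message wording are made up for illustration:

```swift
import ARKit

/// Maps a tracking state to a short hint for the user, or nil when no hint is needed.
/// `trackingHint` is a hypothetical helper; the messages are illustrative.
func trackingHint(for state: ARCamera.TrackingState) -> String? {
    switch state {
    case .normal:
        return nil
    case .notAvailable:
        return "Tracking is not available."
    case .limited(.initializing):
        return "Move the device slowly to start tracking."
    case .limited(.excessiveMotion):
        return "Slow down: the device is moving too fast."
    case .limited(.insufficientFeatures):
        return "Point the camera at a well-lit, textured surface."
    case .limited:
        // Any other reason, including ones added in later ARKit versions.
        return "Tracking is limited."
    }
}
```

Calling this with `camera.trackingState` whenever the state changes and showing the result in a label gives users a concrete action to take.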

Allow time for plane detection to produce clear results, and disable plane detection when you have the results you need.

Plane detection results vary over time—when a plane is first detected, its position and extent may be inaccurate. As the plane remains in the scene over time, ARKit refines its estimate of position and extent. When a large flat surface is in the scene, ARKit may continue changing the plane anchor’s position, extent, and transform after you’ve already used the plane to place content.
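Turning plane detection off once the needed planes are found can be sketched as re-running the session with an updated configuration. The helper name below is hypothetical, and building a fresh configuration (rather than modifying the existing one) is a simplification:

```swift
import ARKit

/// Builds a world-tracking configuration with plane detection on or off.
/// `worldTrackingConfiguration(detectingPlanes:)` is a hypothetical helper name.
func worldTrackingConfiguration(detectingPlanes: Bool) -> ARWorldTrackingConfiguration {
    let configuration = ARWorldTrackingConfiguration()
    // An empty option set disables plane detection.
    configuration.planeDetection = detectingPlanes ? .horizontal : []
    return configuration
}
```

For example, `session.run(worldTrackingConfiguration(detectingPlanes: false))` switches detection off. Running without reset options is expected to keep the current tracking state and existing anchors, so content already placed on a plane stays where it is.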

Limitations

- ARKit cannot give a usable world position for a moving object.

- You cannot place a virtual object behind a real object. This leads to problems such as:

  - When a real object moves in front of a virtual object, the virtual object is still displayed in front of the real object.

  - You cannot convincingly hold a virtual object in your hand, because the virtual object is always drawn in front of your fingers.

Two ideas that may help with the second limitation:

- Segment the camera image based on the feature points that have world positions. Draw the farther real objects first, then the virtual object, and finally the nearest real objects. However, because feature points are sparse (for performance reasons), some edge detection algorithm is needed to get accurate object edges.

- With a dual camera, we can get the depth of each pixel in the camera image, which helps with image segmentation.

AR from Google

Tango is a platform that uses computer vision to give devices the ability to understand their position relative to the world around them. However, Tango requires specialized hardware to run on, which is why ARCore was introduced.

The Tango project will be deprecated on March 1st, 2018. Google is continuing AR development with ARCore, a new platform designed for building augmented reality apps for a broad range of devices without the requirement for specialized hardware.

ARCore is a platform for building augmented reality apps on Android. ARCore is designed to work on a wide variety of qualified Android phones running N and later. During the developer preview, ARCore supports the following devices:

- Google Pixel, Pixel XL, Pixel 2, Pixel 2 XL

- Samsung Galaxy S8 (SM-G950U, SM-G950N, SM-G950F, SM-G950FD, SM-G950W, SM-G950U1)