DR Project

Keywords: ARKit, CoreML, SceneKit

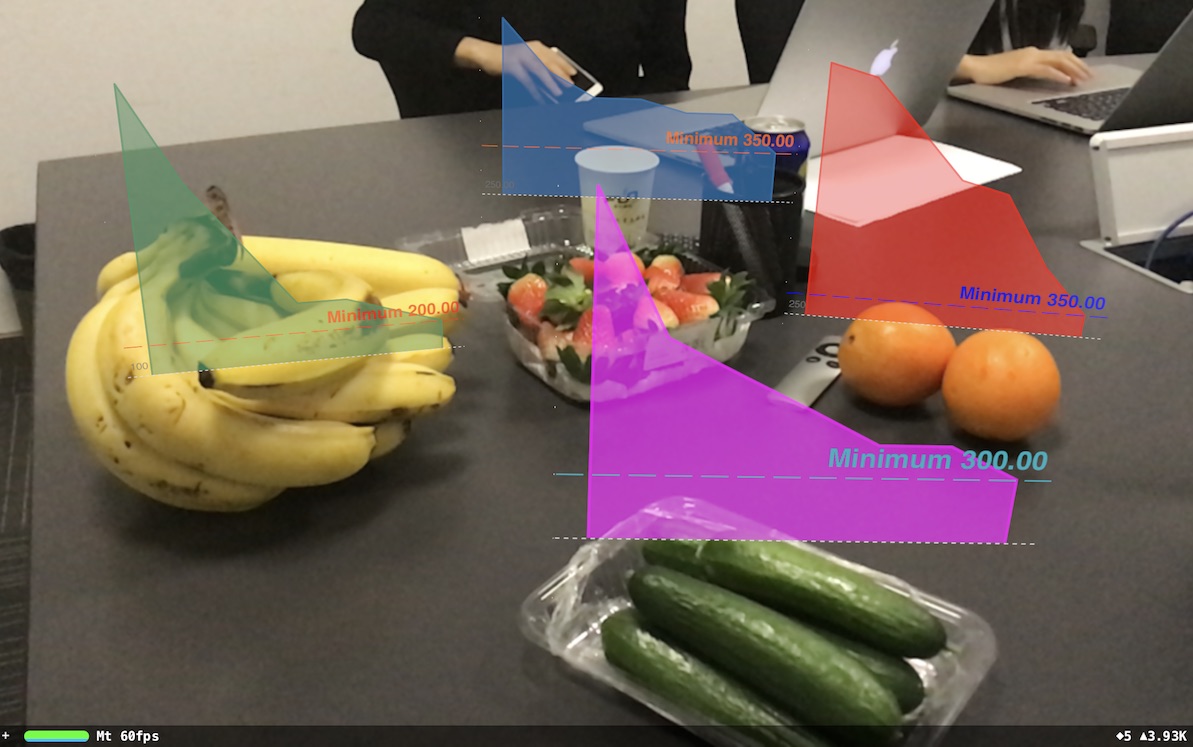

DR (Data to Reality) is a demo that projects data into reality: it uses CoreML for object recognition, then retrieves the data for the recognized object and projects it into the real world, just above the object. In this process, ARKit provides the real-world coordinates at which to place the data, and SceneKit renders the data in the scene.
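The three steps described above (recognize, locate, render) can be sketched roughly as follows. This is a minimal illustration for an iOS project, not the demo's actual code: the function names `classifyCurrentFrame`, `worldPositionAtScreenCenter`, and `addLabel` are hypothetical, and it assumes the caller passes in an `MLModel` such as the one loaded from Inceptionv3.mlmodel. The sizes and offsets are arbitrary placeholder values.

```swift
import ARKit
import CoreML
import SceneKit
import Vision

// Step 1 (CoreML, driven through Vision): classify the current camera frame.
// Hypothetical helper; `mlModel` is assumed to be an image classifier.
// perform(_:) runs synchronously, so a real app would call this off the main thread.
func classifyCurrentFrame(in sceneView: ARSCNView,
                          using mlModel: MLModel,
                          completion: @escaping (String) -> Void) throws {
    guard let frame = sceneView.session.currentFrame else { return }
    let visionModel = try VNCoreMLModel(for: mlModel)
    let request = VNCoreMLRequest(model: visionModel) { request, _ in
        // Use the top classification label, if there is one.
        guard let best = (request.results as? [VNClassificationObservation])?.first else { return }
        DispatchQueue.main.async { completion(best.identifier) }
    }
    request.imageCropAndScaleOption = .centerCrop
    let handler = VNImageRequestHandler(cvPixelBuffer: frame.capturedImage, options: [:])
    try handler.perform([request])
}

// Step 2 (ARKit): find a real-world coordinate behind the center of the screen.
// hitTest(_:types:) is deprecated since iOS 14 in favor of raycasting,
// but it keeps this sketch short.
func worldPositionAtScreenCenter(of sceneView: ARSCNView) -> SCNVector3? {
    let center = CGPoint(x: sceneView.bounds.midX, y: sceneView.bounds.midY)
    guard let hit = sceneView.hitTest(center, types: .featurePoint).first else { return nil }
    let translation = hit.worldTransform.columns.3
    return SCNVector3(translation.x, translation.y, translation.z)
}

// Step 3 (SceneKit): render a small sphere at the position and a text label just above it.
func addLabel(_ text: String, at position: SCNVector3, to sceneView: ARSCNView) {
    let sphereNode = SCNNode(geometry: SCNSphere(radius: 0.005))
    sphereNode.position = position

    let textGeometry = SCNText(string: text, extrusionDepth: 0.5)
    textGeometry.font = UIFont.systemFont(ofSize: 10)
    let textNode = SCNNode(geometry: textGeometry)
    textNode.scale = SCNVector3(0.002, 0.002, 0.002)   // shrink point-sized text to meters
    textNode.position = SCNVector3(0, 0.02, 0)         // 2 cm above the sphere
    textNode.constraints = [SCNBillboardConstraint()]  // keep the text facing the camera

    sphereNode.addChildNode(textNode)
    sceneView.scene.rootNode.addChildNode(sphereNode)
}
```

A caller would chain these: classify the frame, and in the completion handler look up a world position and pass both to `addLabel`. The demo itself may organize these steps differently.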

The image above is a screenshot of the demo.

See the GitHub project Data to Reality for the demo project. Its GitHub page covers the following:

- Requirement

- How to Run the Project

- How to Use the Demo

- Project Chart to Reality

- Face Detection

- Face Recognition

Related techniques used

Notes on the Demo

As this is a rough demo, it needs some enhancements:

- Only four kinds of fruit are supported: banana, orange, cucumber, and strawberry. But for anything recognized by Inceptionv3.mlmodel, we can add a sphere and the name at the world position of the object. (Set `showRecognizedResultNearby` to `true`.)

- The chart data for the four kinds of fruit are images exported from other apps.

- For face detection on iPhone, rotate the device 90 degrees to the left so that it works in landscape orientation. This is an issue that needs to be fixed.

- Face recognition needs a trained face recognition model, called FaceRecognition.mlmodel.

- The face recognition request doesn’t crop the camera image according to the face detection result. This should be done to make face recognition more robust.
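For the last point, one possible approach is to convert the face bounding box into a pixel rectangle and crop the camera image before recognition. The sketch below covers only the coordinate conversion. It assumes the detection result is a Vision-style normalized rectangle (values in 0...1, origin at the lower-left corner), while the crop rectangle uses pixel coordinates with the origin at the top-left. The function name `cropRect` and the `padding` parameter are hypothetical and not part of the demo.

```swift
import Foundation

/// Converts a normalized, lower-left-origin bounding box into a pixel rectangle
/// with a top-left origin, optionally padded and clamped to the image bounds.
/// Hypothetical helper for illustration only.
func cropRect(forNormalizedBox box: CGRect,
              imageWidth: Int,
              imageHeight: Int,
              padding: CGFloat = 0.1) -> CGRect {
    let width = CGFloat(imageWidth)
    let height = CGFloat(imageHeight)

    // Scale to pixels and flip the y axis (lower-left origin -> top-left origin).
    let rect = CGRect(x: box.minX * width,
                      y: (1 - box.maxY) * height,
                      width: box.width * width,
                      height: box.height * height)

    // Grow the box by a fraction of its size on each side so the whole face is included.
    let padded = rect.insetBy(dx: -rect.width * padding, dy: -rect.height * padding)

    // Clamp to the image and snap to whole pixels.
    let imageBounds = CGRect(x: 0, y: 0, width: width, height: height)
    return padded.intersection(imageBounds).integral
}

// Example: a face box in a 400 x 200 image, with no padding.
let faceBox = CGRect(x: 0.25, y: 0.5, width: 0.5, height: 0.25)
let pixelRect = cropRect(forNormalizedBox: faceBox, imageWidth: 400, imageHeight: 200, padding: 0)
print(pixelRect)
```

On iOS, the resulting rectangle could be passed to `CGImage.cropping(to:)` and the cropped image handed to the recognition request. This assumes the image has the same orientation as the one used for detection, which ties into the landscape issue noted above.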